The Problem of Popularity

Imagine you've just launched a promising new web application. Perhaps it's a social platform, an e-commerce site, or a media streaming service. Word spreads, users flood in, and suddenly your single server is struggling to keep up with hundreds, thousands, or even millions of requests. Pages load slowly, features time out, and frustrated users begin to leave.

This is the paradox of digital success: the more popular your service becomes, the more likely it is to collapse under its own weight.

Enter load balancing—the art and science of distributing workloads across multiple computing resources to maximize throughput, minimize response time, and avoid system overload.

What Exactly Is Load Balancing?

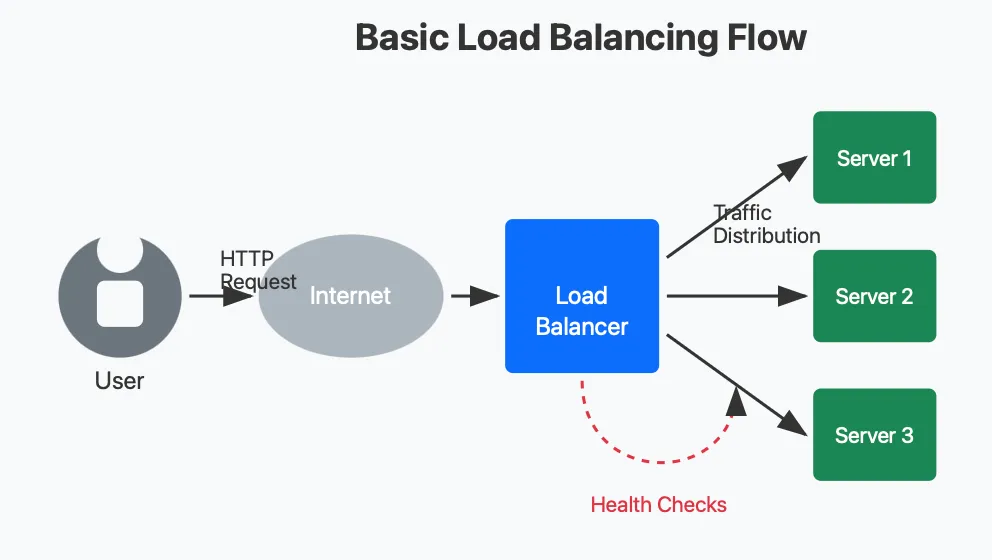

At its core, load balancing relies on a load balancer: a traffic manager that sits between users and your backend servers. When a user sends a request to your application, the load balancer intercepts it and directs it to the most appropriate server based on predefined rules and real-time server conditions.

Think of it as an intelligent receptionist at a busy medical clinic. As patients arrive, the receptionist doesn't simply send everyone to the same doctor. Instead, they consider which doctors are available, their specialties, current workloads, and even patient preferences before making an assignment. The goal is to ensure patients receive timely care while preventing any single doctor from becoming overwhelmed.

In technical terms, load balancing achieves three critical objectives:

Service Distribution: Spreads workloads across multiple servers

Health Monitoring: Continuously checks server status and availability

Request Routing: Directs traffic according to optimized algorithms

The Traffic Flow: How Load Balancing Works

To understand load balancing, let's walk through what happens when a user accesses a load-balanced website:

Request Initiation: A user types your domain name (e.g., www.yourservice.com) into their browser or clicks a link to your application.

DNS Resolution: The domain name resolves to the IP address of your load balancer (not your individual servers).

Load Balancer Reception: The load balancer receives the incoming request.

Server Selection: Based on its configured algorithm, the load balancer selects the most appropriate server to handle the request. This might be the server with the fewest active connections, the fastest response time, or simply the next server in a rotation.

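Health Check Verification: The load balancer only sends requests to servers that are passing their health checks. These checks usually run continuously in the background (for example, periodic requests to a status endpoint on each server), so a server that fails them is taken out of rotation until it recovers.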

Request Forwarding: The load balancer forwards the request to the chosen server.

Response Processing: The server processes the request and sends a response back to the load balancer.

Client Delivery: The load balancer returns the response to the user's browser.

This entire process happens in milliseconds, creating a seamless experience for the user, who remains unaware of the complex orchestration happening behind the scenes.
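To make the flow concrete, here is a minimal in-process sketch in Python. It is a toy model, not a real network proxy: each hypothetical `Backend` is just a Python function standing in for a server, the `healthy` flag stands in for the result of background health checks, and selection uses simple round robin among healthy servers. The names `Backend`, `LoadBalancer`, `run_health_checks`, and `handle` are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Backend:
    """A hypothetical backend: a name plus a function that handles requests."""
    name: str
    handler: Callable[[str], str]
    healthy: bool = True  # kept up to date by run_health_checks()


class LoadBalancer:
    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends
        self._counter = 0  # round-robin position

    def run_health_checks(self, probe: Callable[[Backend], bool]) -> None:
        """Refresh each backend's health flag; meant to run periodically."""
        for backend in self.backends:
            backend.healthy = probe(backend)

    def select(self) -> Backend:
        """Server selection: round robin over backends that passed health checks."""
        healthy = [b for b in self.backends if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backends available")
        chosen = healthy[self._counter % len(healthy)]
        self._counter += 1
        return chosen

    def handle(self, request: str) -> str:
        backend = self.select()              # pick a server
        response = backend.handler(request)  # forward; the server processes it
        return response                      # deliver the response to the client


if __name__ == "__main__":
    pool = [Backend(f"app{i}", lambda req, i=i: f"app{i} served {req}") for i in (1, 2, 3)]
    lb = LoadBalancer(pool)
    print(lb.handle("/home"))  # app1 served /home
    lb.run_health_checks(lambda b: b.name != "app2")  # simulate app2 failing
    print(lb.handle("/cart"))  # app3 served /cart (app2 is skipped)
```

Marking a backend unhealthy removes it from rotation on the next request without the client noticing, which is the failover behavior behind high availability.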

The Benefits of Load Balancing

Load balancing offers numerous advantages that extend far beyond simply handling more traffic:

1. High Availability (HA)

Perhaps the most critical benefit of load balancing is ensuring your service remains available even when individual components fail. If a server crashes or becomes unresponsive, the load balancer automatically redirects traffic to healthy servers, preventing service interruptions.

Real-world impact: For e-commerce platforms, this could be the difference between a successful Black Friday sale and millions in lost revenue due to site crashes.

2. Scalability

As your user base grows, load balancing allows you to add more servers to your infrastructure without architectural changes. Simply deploy new servers, add them to the load balancer's server pool, and they'll start receiving traffic.

Real-world impact: A streaming service such as Netflix can add server capacity when a popular new show is released, handling millions of simultaneous streams without degrading quality.

3. Redundancy

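Load balancing removes individual servers as single points of failure. With multiple servers handling the same workloads, the failure of any one server won't bring down your entire service. The load balancer itself must also be deployed redundantly (for example, as an active-passive pair) so that it does not become the new single point of failure.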

Real-world impact: This kind of redundancy is what allows financial services to target 99.999% uptime ("five nines", or roughly 5.26 minutes of downtime per year), which is essential for critical banking operations.
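The five-nines figure is simple arithmetic: the allowed downtime is the unavailable fraction of a year. This short Python sketch assumes a 365.25-day year to average in leap years; with a 365-day year, five nines works out to about 5.256 minutes instead.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, averaging in leap years


def max_downtime_minutes(availability_percent: float) -> float:
    """Yearly downtime budget implied by an availability target."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for target in (99.9, 99.99, 99.999):
    print(f"{target}% uptime -> {max_downtime_minutes(target):.2f} minutes of downtime per year")
```

Each additional nine cuts the downtime budget by a factor of ten, which is why the higher targets depend on redundancy rather than on any single reliable machine.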

4. Flexibility and Maintenance

With load balancing, you can perform maintenance on individual servers without taking your entire service offline. The load balancer simply stops routing traffic to servers undergoing maintenance.

Real-world impact: SaaS providers can roll out updates during business hours without disrupting customer operations.
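In practice, taking a server out of rotation is usually done gracefully, a pattern often called connection draining: the server stops receiving new requests but is allowed to finish the ones already in flight before it goes offline. A minimal sketch, assuming a hypothetical `draining` flag that an operator sets before maintenance and a per-server count of active connections:

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_connections: int = 0
    draining: bool = False  # hypothetical flag set by an operator before maintenance


def routable(servers: list[Server]) -> list[Server]:
    """Servers eligible for *new* requests; draining servers are skipped."""
    return [s for s in servers if not s.draining]


def ready_for_maintenance(server: Server) -> bool:
    """A draining server can be taken offline once its in-flight work finishes."""
    return server.draining and server.active_connections == 0
```

Existing requests on a draining server complete normally, so users mid-session see no errors while the update rolls out.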

5. Enhanced Security

Many modern load balancers include security features such as SSL/TLS termination, DDoS protection, and web application firewalls (WAFs) that can filter out malicious traffic before it reaches your application servers.

Real-world impact: E-commerce sites can better protect sensitive customer data and support PCI DSS compliance.

Load Balancing Algorithms: The Decision Makers

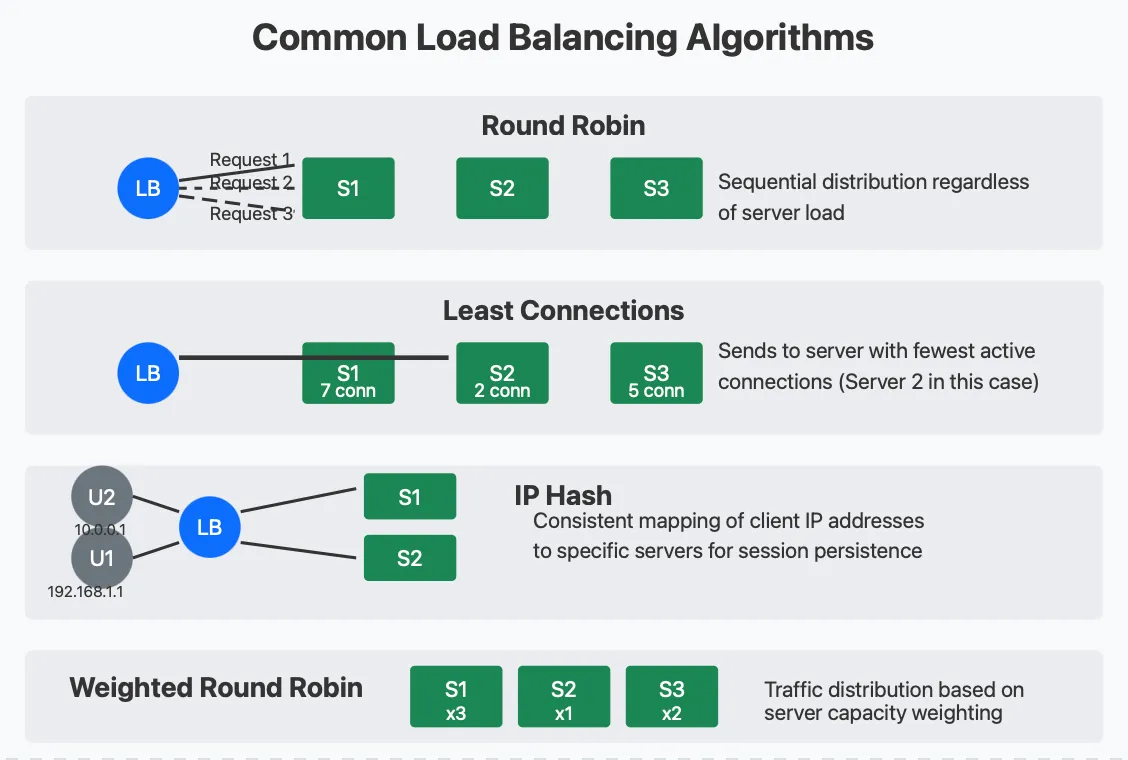

The intelligence of a load balancer lies in its algorithm—the logic it uses to decide which server should handle each incoming request. Let's explore some common algorithms:


1. Round Robin

How it works: The load balancer cycles through the list of servers in sequence. The first request goes to server 1, the second to server 2, and so on, cycling back to the beginning once all servers have been used.

Best for: Simple implementations where all servers have equal capacity and the request workload is fairly uniform.

Limitations: Doesn't account for varying server capabilities or request complexity.
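A minimal Python sketch of round robin, assuming servers are identified by plain name strings (`server1` and so on are placeholders). The standard library's `itertools.cycle` already implements the "wrap back to the start" behavior:

```python
from itertools import cycle
from typing import Iterator


def round_robin(servers: list[str]) -> Iterator[str]:
    """Yield servers in order forever: 1, 2, 3, 1, 2, 3, ..."""
    if not servers:
        raise ValueError("no servers to rotate through")
    return cycle(servers)


if __name__ == "__main__":
    rotation = round_robin(["server1", "server2", "server3"])
    print([next(rotation) for _ in range(5)])
    # ['server1', 'server2', 'server3', 'server1', 'server2']
```

The rotation never looks at load: a server stuck on a slow request still receives its next turn, which is exactly the limitation noted above.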

2. Least Connections

How it works: The load balancer tracks the number of active connections to each server and routes new requests to the server with the fewest active connections.

Best for: Environments where requests may take varying amounts of time to process or where servers have different processing capabilities.

Limitations: Connection count alone doesn't always accurately reflect actual server load.
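A minimal sketch, assuming the load balancer keeps a hypothetical `active` dictionary mapping each server name to its current number of open connections, incremented when a connection is assigned and decremented when it closes:

```python
def least_connections(active: dict[str, int]) -> str:
    """Return the server with the fewest active connections.

    Ties go to whichever server appears first in `active`.
    """
    if not active:
        raise ValueError("no servers to choose from")
    return min(active, key=lambda server: active[server])


if __name__ == "__main__":
    active = {"server1": 12, "server2": 4, "server3": 9}
    target = least_connections(active)  # "server2"
    active[target] += 1  # count the new connection; decrement when it closes
    print(target)
```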

3. IP Hash

How it works: The load balancer creates a hash based on the client's IP address and consistently maps that hash to a specific server.

Best for: Applications that require session persistence, where the same user should consistently connect to the same server.

Limitations: Can lead to uneven distribution when many users share one IP address (e.g., employees behind a single corporate NAT gateway or proxy), since they all map to the same server.
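A minimal sketch of IP hashing. It uses SHA-256 from Python's standard library rather than the built-in `hash()`, because `hash()` of a string is randomized per process by default and would send the same client to different servers after a restart. Reducing the hash modulo the number of servers is the simplest mapping, not the only one:

```python
import hashlib


def ip_hash(client_ip: str, servers: list[str]) -> str:
    """Deterministically map a client IP address to one server."""
    if not servers:
        raise ValueError("no servers to choose from")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


if __name__ == "__main__":
    pool = ["server1", "server2", "server3"]
    # The same client IP always lands on the same server.
    print(ip_hash("203.0.113.7", pool) == ip_hash("203.0.113.7", pool))  # True
```

One consequence of the modulo step: adding or removing a server changes `len(servers)` and remaps most clients to different servers. Consistent hashing is the usual technique for limiting that reshuffling.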

4. Weighted Methods

How it works: Administrators assign different weights to servers based on their capacity. Servers with higher weights receive proportionally more traffic.

Best for: Heterogeneous environments with servers of varying capabilities.

Limitations: Requires manual configuration, and weights must be revisited as server capacity or traffic patterns change.
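One common way to implement weighted distribution is smooth weighted round robin, sketched below in Python. On each pick, every server's weight is added to its running score, the highest score wins, and the winner's score is reduced by the total weight. This spreads a heavy server's turns out instead of sending it a burst of consecutive requests. The server names and weights are made-up examples:

```python
class SmoothWeightedRoundRobin:
    def __init__(self, weights: dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}  # running scores
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns its weight; the highest running score wins.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=lambda name: self.current[name])
        # Charge the winner the total weight so others catch up.
        self.current[chosen] -= self.total
        return chosen


if __name__ == "__main__":
    wrr = SmoothWeightedRoundRobin({"big": 5, "small1": 1, "small2": 1})
    print([wrr.pick() for _ in range(7)])
    # ['big', 'big', 'small1', 'big', 'small2', 'big', 'big']
```

Over every cycle of seven picks, `big` receives five requests and each small server one, matching the 5:1:1 weights.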

5. Response Time

How it works: The load balancer monitors how long each server takes to respond to requests and favors the fastest-responding servers.

Best for: Performance-critical applications where minimizing latency is crucial.

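Limitations: Can oscillate: the fastest server attracts more traffic, slows down under the extra load, and then loses traffic to another server, which in turn becomes the next bottleneck.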
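A minimal sketch of response-time-based selection. It tracks an exponentially weighted moving average (EWMA) of each server's latency so a single slow or fast request does not swing decisions; the `alpha` value and millisecond figures are assumptions for illustration. Servers with no measurements yet are tried first so they get a chance to report a latency:

```python
from typing import Optional


class ResponseTimeBalancer:
    def __init__(self, servers: list[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest sample (assumed value)
        self.avg_ms: dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        # Try servers with no measurements first, then the lowest average.
        unmeasured = [s for s, avg in self.avg_ms.items() if avg is None]
        if unmeasured:
            return unmeasured[0]
        return min(self.avg_ms, key=lambda s: self.avg_ms[s])

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a new latency sample into the server's moving average."""
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old
```

Smoothing damps the oscillation described above but does not remove it: the server with the lowest average still attracts new traffic until its average rises.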

6. Random with Two Choices

How it works: The load balancer randomly selects two servers and then chooses the one with fewer connections.

Best for: Large-scale deployments where checking all servers would be inefficient.

Limitations: Not as precise as checking all servers, but still effective and more scalable.
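This technique is widely known as the "power of two choices". A minimal sketch, assuming a hypothetical `active` dictionary that maps each server name to its active connection count; passing a seeded `random.Random` makes the picks reproducible in tests:

```python
import random

_default_rng = random.Random()


def power_of_two_choices(active: dict[str, int], rng: random.Random = _default_rng) -> str:
    """Sample two distinct servers at random and return the less loaded one.

    Only two connection counts are compared per decision, no matter how
    many servers are in the pool.
    """
    servers = list(active)
    if not servers:
        raise ValueError("no servers to choose from")
    if len(servers) == 1:
        return servers[0]
    first, second = rng.sample(servers, 2)
    return first if active[first] <= active[second] else second
```

The busiest server can never win a pairing against a less loaded one, so it is naturally starved of new work without the balancer ever scanning the full pool.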

Types of Load Balancers

Load balancers come in different forms, each with its own strengths:

Hardware Load Balancers

Physical appliances specifically designed for load balancing.

Pros:

High performance

Purpose-built hardware

Often include specialized ASICs for SSL/TLS acceleration

Cons:

Expensive

Fixed capacity

Physical maintenance required

Popular products:

F5 BIG-IP

Citrix ADC (formerly NetScaler)

A10 Networks Thunder ADC

Software Load Balancers

Software applications that run on standard operating systems.

Pros:

Cost-effective

Flexible deployment options

Easy to scale

Cons:

Performance depends on underlying hardware

May require OS maintenance

Popular products:

NGINX Plus

HAProxy

Apache Traffic Server

Envoy

Cloud Load Balancers

Managed load balancing services provided by cloud platforms.

Pros:

No infrastructure to maintain

Pay-as-you-go pricing

Seamlessly scales with demand

Cons:

Less control over configuration

Potential vendor lock-in

Popular products:

AWS Elastic Load Balancing

Google Cloud Load Balancing

Azure Load Balancer

DigitalOcean Load Balancers

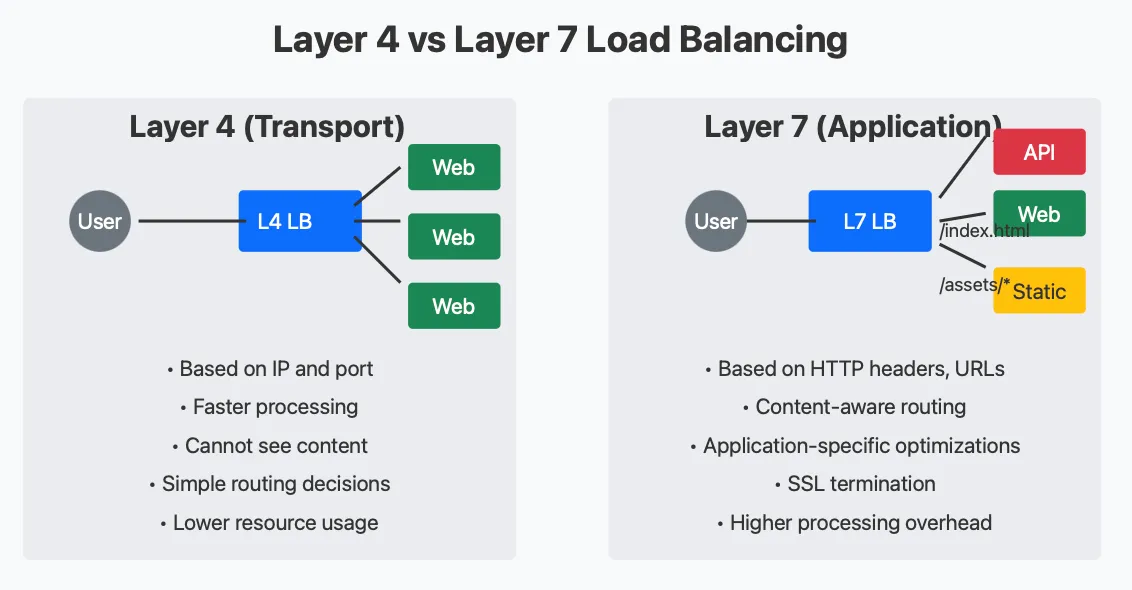

Layer 4 vs. Layer 7 Load Balancing

Load balancers operate at different layers of the OSI network model, with important distinctions:

Layer 4 vs Layer 7 Load Balancing